Installation

Common cloud platforms in China:

Use CentOS 7.9

# Remove old Docker versions
sudo yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

# Configure the Docker yum repository
sudo yum install -y yum-utils
sudo yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install the latest Docker
sudo yum install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

# Start Docker now and enable it at boot; --now combines enable and start
sudo systemctl enable docker --now

# Configure registry mirrors
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": [
    "https://docker.m.daocloud.io",
    "https://docker.imgdb.de",
    "https://docker-0.unsee.tech",
    "https://docker.hlmirror.com",
    "https://docker.1ms.run",
    "https://func.ink",
    "https://lispy.org",
    "https://docker.xiaogenban1993.com"
  ]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker

Basic commands

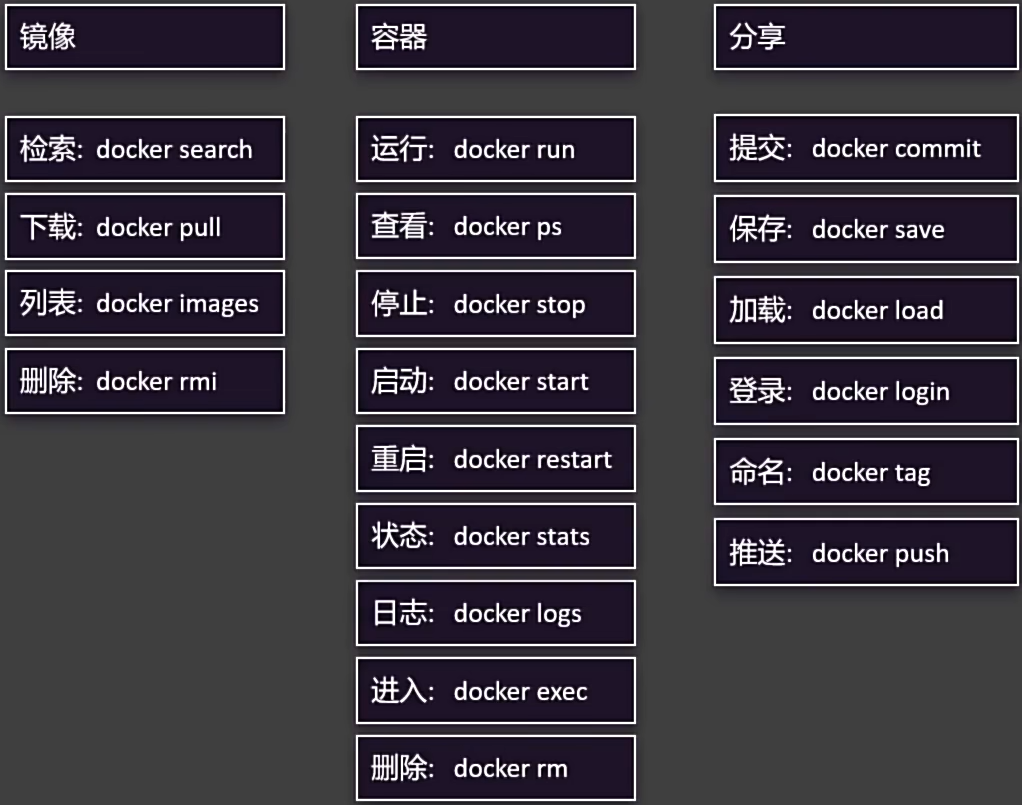

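# List running containers
docker ps

# List all containers
docker ps -a

# Search for NGINX images
docker search nginx

············↓↓ Download images ↓↓············

# Pull the NGINX image
docker pull nginx

# Pull a specific NGINX version
docker pull nginx:1.26.0

# List all images
docker images

# Remove the image with the given IMAGE ID
docker rmi e784f4560448

# Run a new container (with no tag given, the latest tag is pulled and run)
docker run nginx

# Stop a container (by container name)
docker stop jovial_mcclintock

············↓↓ Start containers ↓↓············

# Start a container (by CONTAINER ID)
docker start 9cf1aa7f9b27

# Restart a container (a unique prefix of the ID is enough)
docker restart 9cf

# Show a container's resource usage
docker stats 9cf

# Show a container's logs
docker logs 9cf

# Remove a container (it must be stopped)
docker rm 9cf

# Force-remove a container (even if it is running)
docker rm -f 9cf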

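············↓↓ Enter a container and modify files ↓↓············

# Start a container in the background
docker run -d --name mynginx nginx:1.26.0

# Start in the background and publish a port (host port 88 maps to container port 80)
# The name mynginx is already taken by the container above, so remove that one first
docker rm -f mynginx
docker run -d --name mynginx -p 88:80 nginx:1.26.0

# Enter the container (-it opens an interactive terminal)
docker exec -it mynginx /bin/bash

# Change the content of index.html
root@c7e8c8b9cc31:/usr/share/nginx/html# echo "<h1>Hello,Docker.</h1>" > index.html

# Leave the container
root@c7e8c8b9cc31:/# exit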

············↓↓ Build and save an image ↓↓············

# Commit the container's changes as a new image
docker commit -m "update index.html" mynginx myblog:v1.0.0

# docker images now lists the new myblog image
[root@master-61 ~]#docker images
REPOSITORY   TAG      IMAGE ID       CREATED          SIZE
myblog       v1.0.0   e73a9460b80d   17 seconds ago   188MB
nginx        1.26.0   94543a6c1aef   10 months ago    188MB

# Save the image to a file
docker save -o myblog.tar myblog:v1.0.0

# Remove several images at once (containers that use them must be removed first)
docker rmi e73a9460b80d 94543a6c1aef

# Load the image back
docker load -i myblog.tar

············↓↓ Share an image ↓↓············

# Log in to Docker Hub (from mainland China the login will most likely time out)
docker login

# Re-tag the image (akihana is the Docker Hub username)
docker tag myblog:v1.0.0 akihana/myblog:v1.0.0

# Push the image
docker push akihana/myblog:v1.0.0

# Note: it is best to also create a latest tag for the newest version
docker tag myblog:v1.0.0 akihana/myblog:latest
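The note on docker run nginx and the latest tag above both rest on one rule: an image reference with no tag is treated as :latest. Here is a minimal sketch of that rule in shell. The function name with_default_tag is made up for illustration and is not part of Docker.

```shell
# Hypothetical helper: print the image reference Docker would resolve,
# adding ":latest" when no tag or digest is present.
with_default_tag() {
  ref=$1
  # Only the part after the last "/" can carry a tag; a colon earlier in
  # the reference may be a registry port such as localhost:5000.
  case ${ref##*/} in
    *:*|*@*) printf '%s\n' "$ref" ;;
    *)       printf '%s:latest\n' "$ref" ;;
  esac
}

with_default_tag nginx            # prints nginx:latest
with_default_tag nginx:1.26.0     # prints nginx:1.26.0
with_default_tag akihana/myblog   # prints akihana/myblog:latest
```

This is why pushing both akihana/myblog:v1.0.0 and akihana/myblog:latest is useful: anyone who pulls the image without naming a version gets the latest tag.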

Storage

Two approaches; keep them distinct:

Bind mount (directory mount):
-v /app/nghtml:/usr/share/nginx/html

Volume mapping:
-v ngconf:/etc/nginx
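The two forms differ only in what comes before the first colon. An absolute host path gives a bind mount, and a bare name gives a named volume that Docker manages. The helper below is a hypothetical illustration of that rule. mount_kind is not a Docker command, and it covers only the two forms shown here.

```shell
# Hypothetical helper: classify the source half of a "-v SOURCE:TARGET" value.
mount_kind() {
  source_part=${1%%:*}   # everything before the first colon
  case $source_part in
    /*) echo "bind mount" ;;     # absolute host path
    *)  echo "named volume" ;;   # plain name, managed by Docker
  esac
}

mount_kind /app/nghtml:/usr/share/nginx/html   # prints bind mount
mount_kind ngconf:/etc/nginx                   # prints named volume
```

In practice, a bind mount shows whatever is in the host directory, so an empty /app/nghtml hides the image's default pages. A new named volume such as ngconf is first populated with the files already in the image at /etc/nginx.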

docker run -d -p 99:80 \

-v /app/nghtml:/usr/share/nginx/html \

-v ngconf:/etc/nginx \

--name app03 \

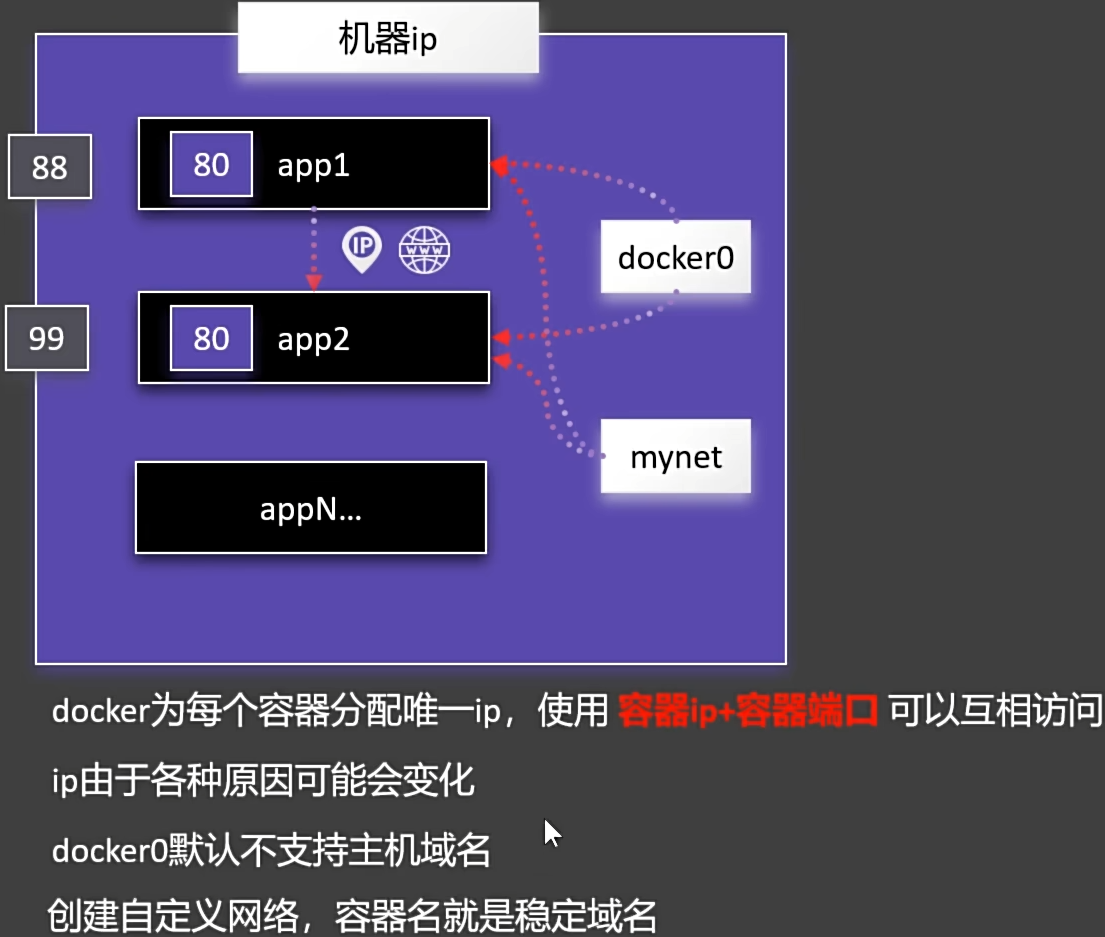

nginx网络

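Create a custom network so that container names can be used as stable hostnames.

# Show detailed information about a Docker object (container, image, volume, network, and so on)
docker container inspect mynginx

Short form:
docker inspect mynginx

# Create a custom Docker network named mynet
docker network create mynet

# Create two new NGINX containers, app1 and app2, attach them to mynet, and publish them on host ports 88 and 99
docker run -d -p 88:80 --name app1 --network mynet nginx
docker run -d -p 99:80 --name app2 --network mynet nginx

# Look up the IP addresses of the new containers
docker inspect app1
The address is "IPAddress": "172.18.0.2",

docker inspect app2
The address is "IPAddress": "172.18.0.3",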

#进入app1的bash控制台,通过容器内部域名地址访问app2容器(curl http:<容器IP地址/容器名:宿主机端口号>)

[root@master-61 ~]#docker exec -it app1 bash

root@d7d7b9527c43:/# curl http://app2:80

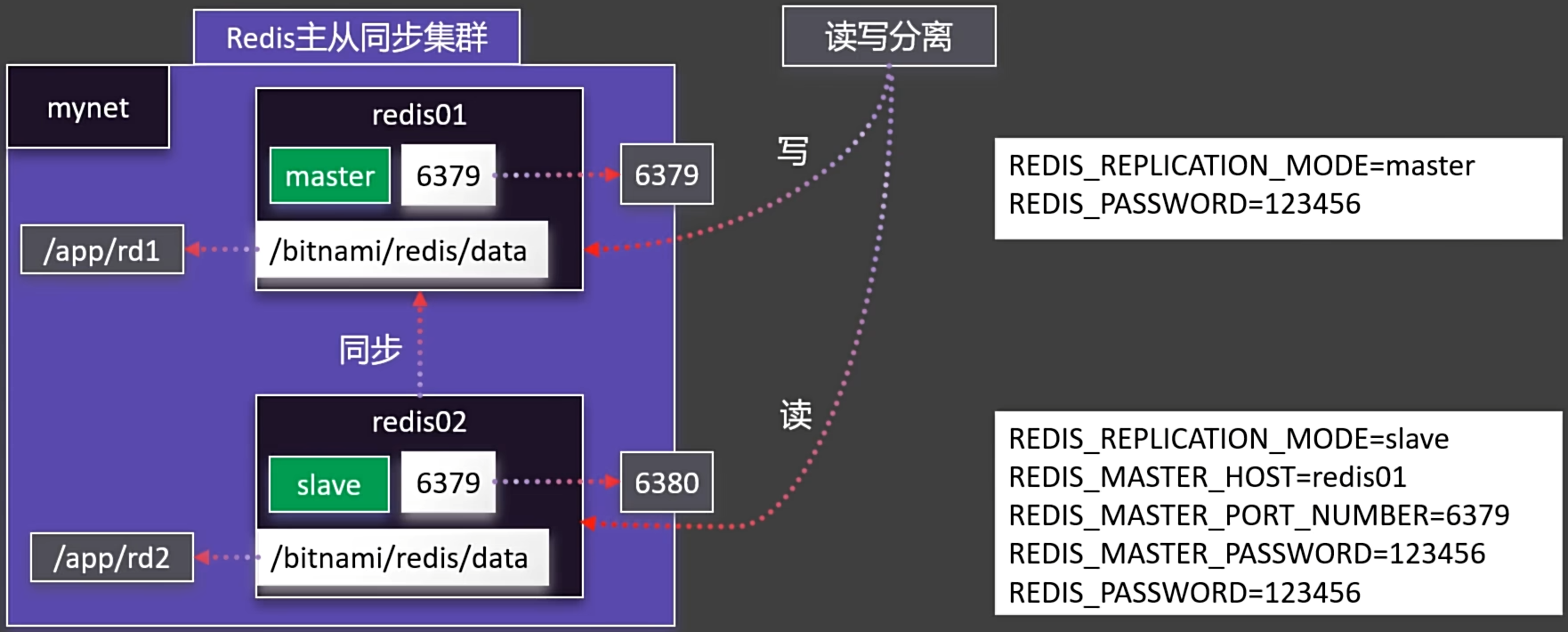
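Which port to use depends on where the request starts. From outside the host you use the published host port (88 or 99). From another container on mynet you use the container port (80), because traffic between containers never goes through the port mapping. The sketch below splits a -p value into its two halves. describe_port_mapping is a made-up name, and the helper assumes the HOST:CONTAINER or IP:HOST:CONTAINER forms only.

```shell
# Hypothetical helper: show which half of a "-p" value is which.
describe_port_mapping() {
  mapping=$1
  container_port=${mapping##*:}   # after the last colon
  rest=${mapping%:*}              # everything before the last colon
  host_port=${rest##*:}           # drops an optional leading bind IP
  echo "host=$host_port container=$container_port"
}

describe_port_mapping 88:80             # prints host=88 container=80
describe_port_mapping 127.0.0.1:99:80   # prints host=99 container=80
```

So curl http://app2:80 works from inside app1, while a browser on another machine would use http://<server IP>:99 to reach the same NGINX.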

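# Note: 80 here is the container port, not the host port (88/99); traffic inside mynet does not go through the host port mapping.

Redis master-replica replication cluster

# Custom network (skip this if mynet already exists from the previous section)
docker network create mynet

# Create the directories (see "Problems encountered" for why)
mkdir -p /app/redis01
mkdir -p /app/redis02

# Change the directory permissions
chmod -R 777 /app/redis01
chmod -R 777 /app/redis02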

#主节点

docker run -d -p 6379:6379 \

-v /app/redis01:/bitnami/redis/data \

-e REDIS_REPLICATION_MODE=master \

-e REDIS_PASSWORD=123456 \

--network mynet --name redis01 \

bitnami/redis

#从节点

docker run -d -p 6380:6379 \

-v /app/redis02:/bitnami/redis/data \

-e REDIS_REPLICATION_MODE=slave \

-e REDIS_MASTER_HOST=redis01 \

-e REDIS_MASTER_PORT_NUMBER=6379 \

-e REDIS_MASTER_PASSWORD=123456 \

-e REDIS_PASSWORD=123456 \

--network mynet --name redis02 \

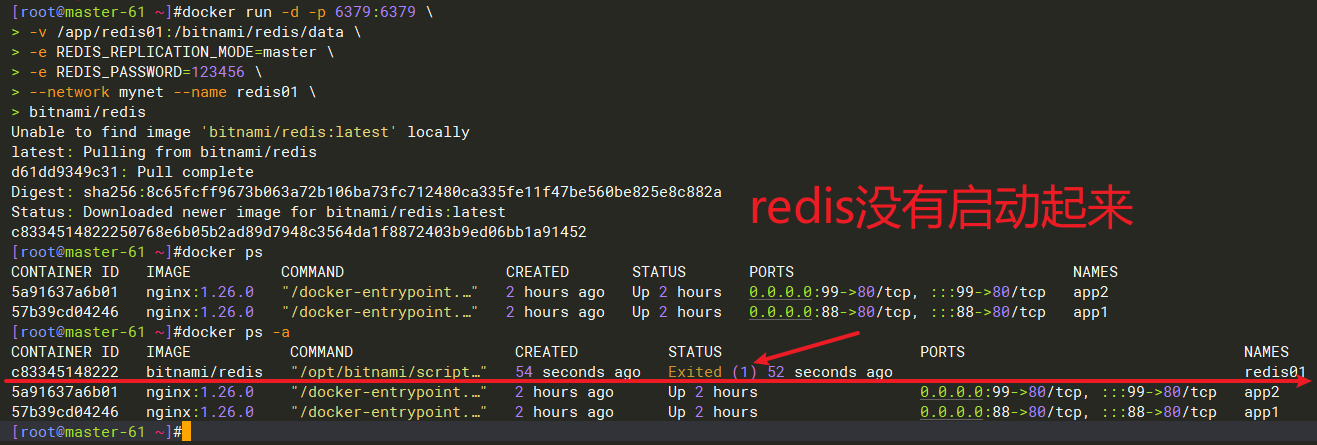

bitnami/redis遇到的问题

# Check the logs to find the problem
[root@master-61 ~]#docker logs c83
1:M 14 Apr 2025 11:26:40.936 # Can't open or create append-only dir appendonlydir: Permission denied

# Check the permissions of the /app/redis01 directory
[root@master-61 ~]#cd /app/
[root@master-61 /app]#ll
total 0
drwxr-xr-x 2 root root 24 Apr 14 16:58 nghtml
drwxr-xr-x 2 root root  6 Apr 14 19:26 redis01

# /app/redis01 is owned by root: only root can read, write, and execute, while other users can only read and execute. The bitnami/redis container runs as a non-root user, so it cannot write to the directory.

# Change the permissions
chmod -R 777 /app/redis01

# Restart the redis01 container
docker restart redis01

# The container is now up
[root@master-61 /app]#docker ps
CONTAINER ID   IMAGE           COMMAND                  CREATED          STATUS         PORTS                                       NAMES
c83345148222   bitnami/redis   "/opt/bitnami/script…"   20 minutes ago   Up 4 seconds   0.0.0.0:6379->6379/tcp, :::6379->6379/tcp   redis01
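You can check for this failure before starting the container. The sketch below reports whether users other than the owner and group may write to a directory, which is what the container's non-root user needs on a root-owned bind mount. others_can_write is a hypothetical helper name, and stat -c assumes GNU coreutils as found on CentOS.

```shell
# Hypothetical helper: print "yes" if the "others" permission bits of DIR
# include write, otherwise "no".
others_can_write() {
  mode=$(stat -c '%a' "$1") || return 1   # octal mode, e.g. 755
  last=${mode#"${mode%?}"}                # last digit = bits for "others"
  case $last in
    2|3|6|7) echo yes ;;
    *)       echo no ;;
  esac
}

# Usage on the host (commented out; the path comes from the notes above):
# others_can_write /app/redis01
```

chmod 777 makes the check pass but opens the directory to every user on the host. A narrower option, assuming the image keeps Bitnami's documented default UID of 1001, would be chown -R 1001 /app/redis01.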

启动MySQL

docker run -d -p 3306:3306 \

-v /app/mysql_conf:/etc/mysql/conf.d \

-v /app/mysql_data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name mysql01 \

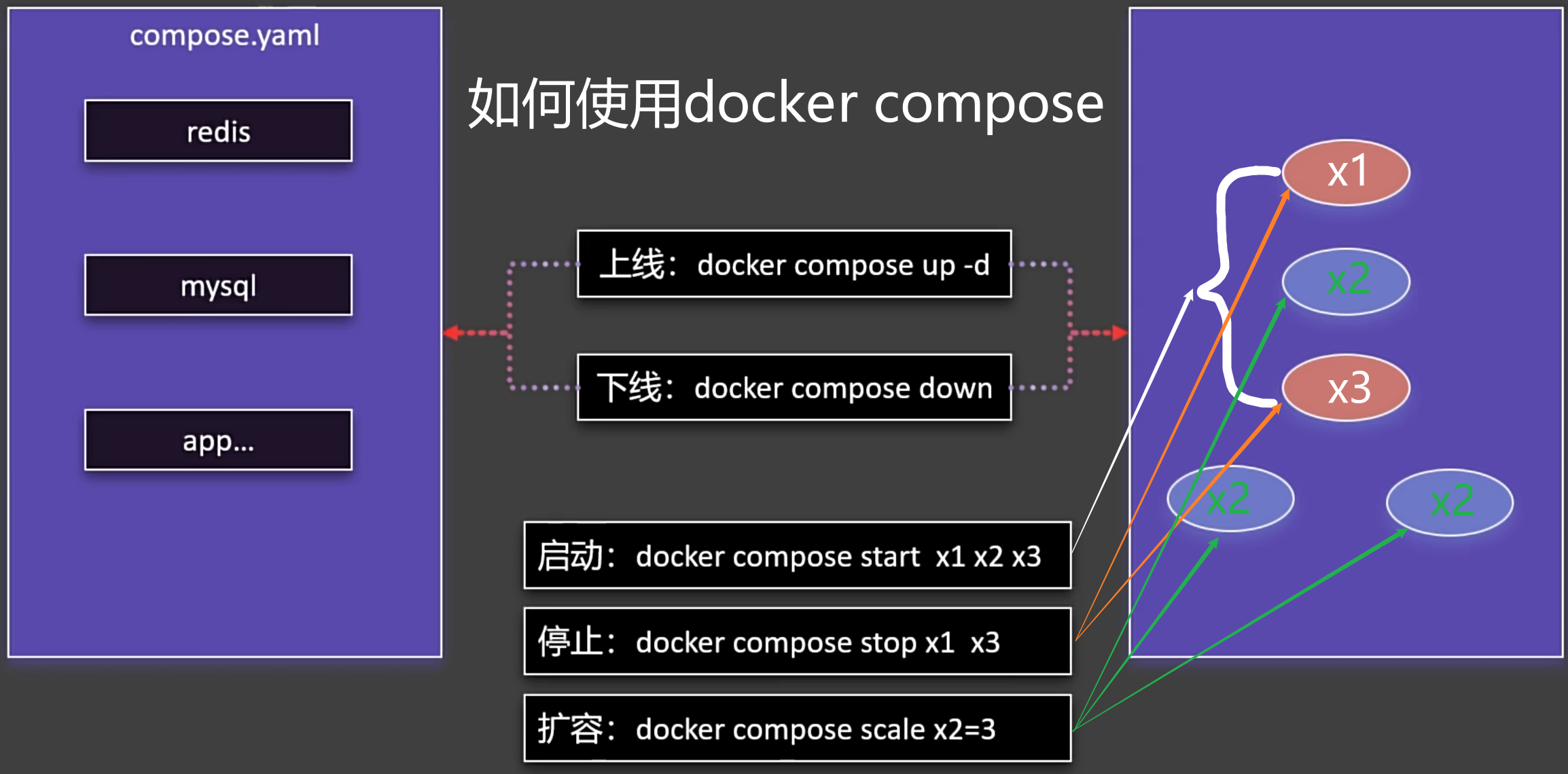

mysql:8.0.37-debianDocker Compose

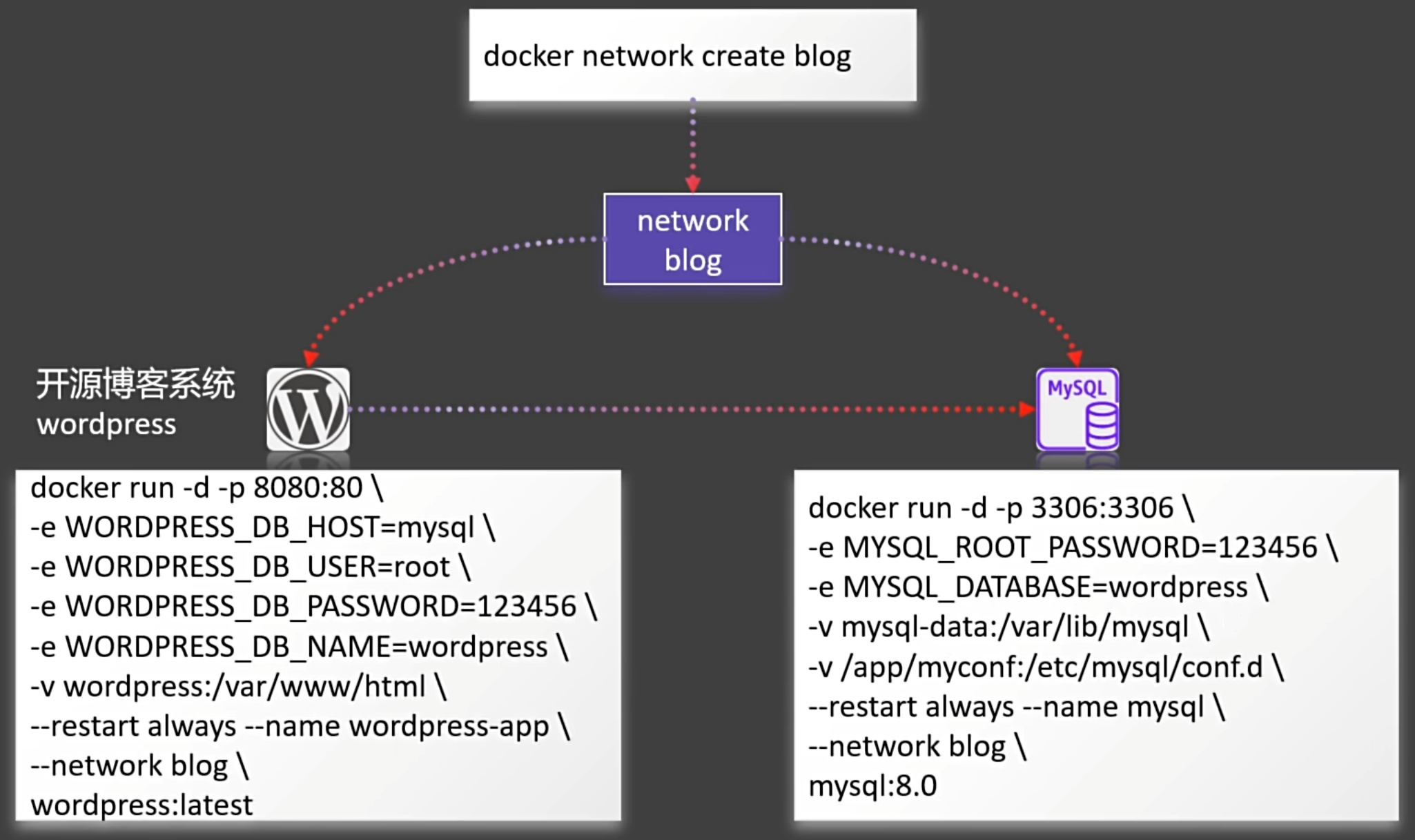

命令式安装wordpress博客

#创建网络

docker network create blog

#启动mysql

docker run -d -p 3306:3306 \

-e MYSQL_ROOT_PASSWORD=123456 \

-e MYSQL_DATABASE=wordpress \

-v mysql-data:/var/lib/mysql \

-v /app/myconf:/etc/mysql/conf.d \

--restart always --name mysql \

--network blog \

mysql:8.0

#启动wordpress

docker run -d -p 8080:80 \

-e WORDPRESS_DB_HOST=mysql \

-e WORDPRESS_DB_USER=root \

-e WORDPRESS_DB_PASSWORD=123456 \

-e WORDPRESS_DB_NAME=wordpress \

-v wordpress:/var/www/html \

--restart always --name wordpress-app \

--network blog \

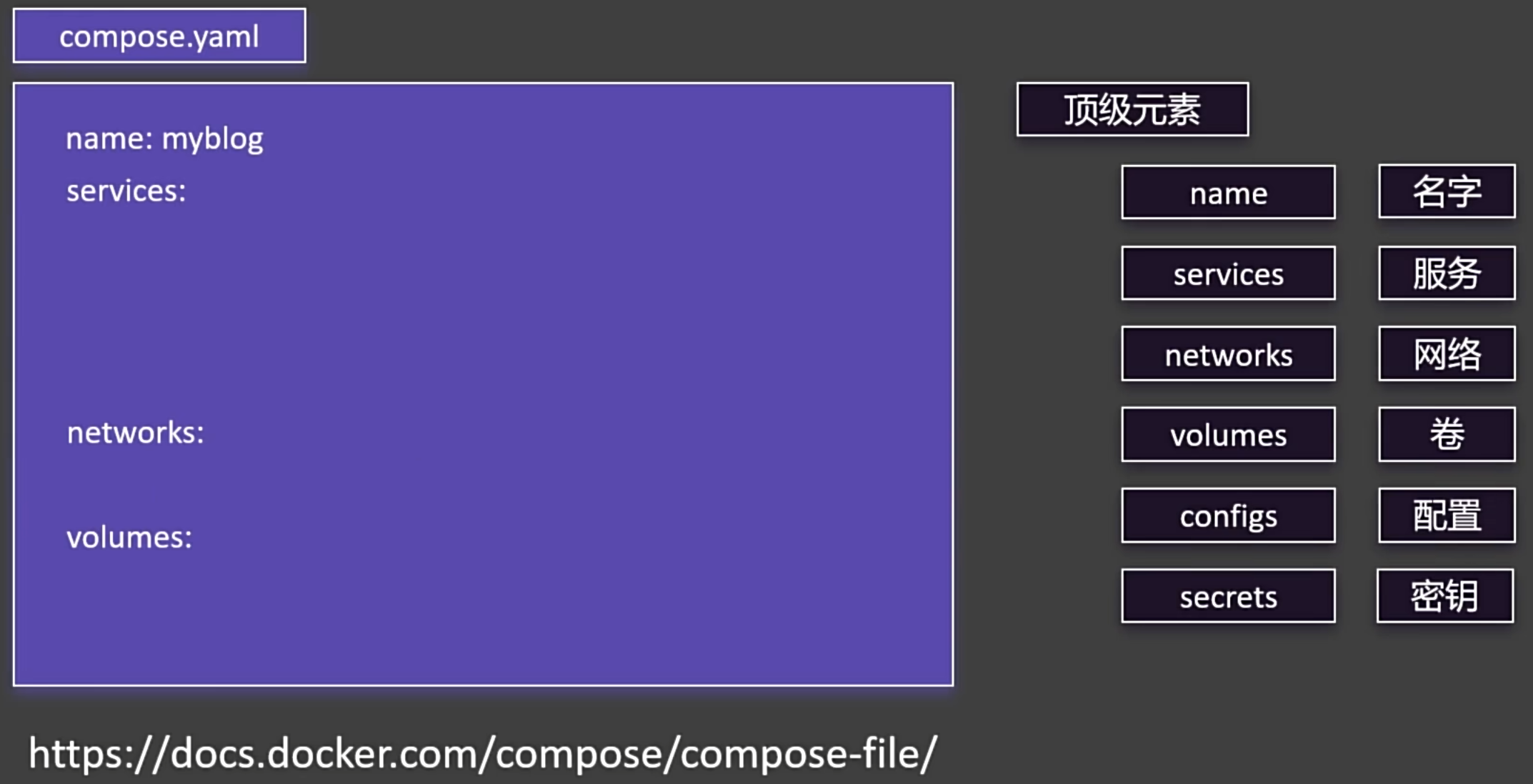

wordpress:latestcompose.yaml安装wordpress博客

name: myblog
services:
  mysql:
    container_name: mysql
    image: mysql:8.0
    ports:
      - "3306:3306"
    environment:
      - MYSQL_ROOT_PASSWORD=123456
      - MYSQL_DATABASE=wordpress
    volumes:
      - mysql-data:/var/lib/mysql
      - /app/myconf:/etc/mysql/conf.d
    restart: always
    networks:
      - blog

  wordpress:
    container_name: wordpress1
    image: wordpress
    ports:
      - "8080:80"
    environment:
      WORDPRESS_DB_HOST: mysql
      WORDPRESS_DB_USER: root
      WORDPRESS_DB_PASSWORD: "123456"
      WORDPRESS_DB_NAME: wordpress
    volumes:
      - wordpress:/var/www/html
    restart: always
    networks:
      - blog
    depends_on:
      - mysql

volumes:
  mysql-data:
  wordpress:

networks:
  blog:

Features

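Incremental updates

After you edit the Compose file and bring the application up again, only the services whose configuration changed are recreated.

# Start the application (after editing the yaml, running up again only touches the changed containers: an incremental update)
docker compose -f compose.yaml up -d

Data is preserved

By default, even when down removes the containers, the mounted volumes are kept, which is the safer behavior.

# Take the application down (the volumes used by the containers are not removed)
docker compose -f compose.yaml down

# Take the application down and also remove all of its images and volumes
docker compose -f compose.yaml down --rmi all -v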

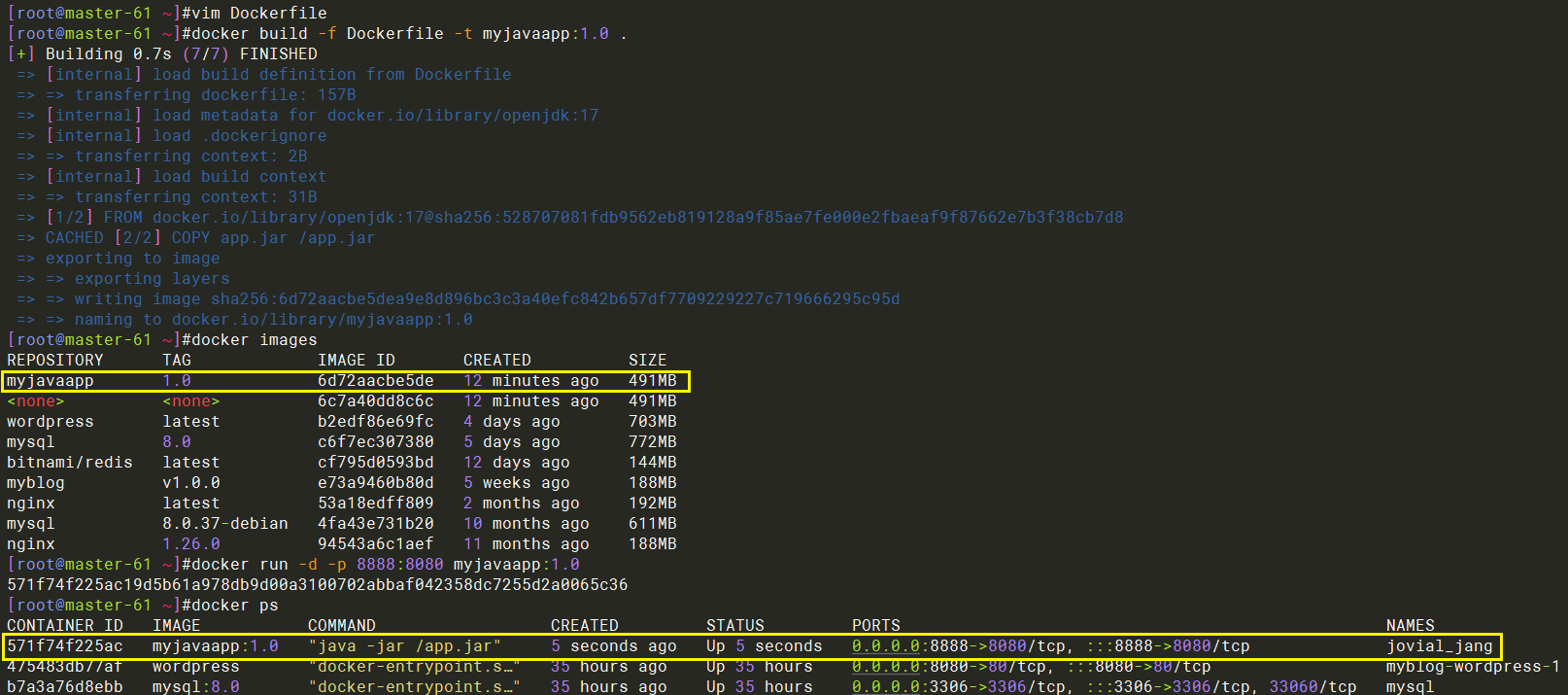

6. Dockerfile

# Upload the jar file
[root@master-61 ~]#rz

# Write the Dockerfile
[root@master-61 ~]#vim Dockerfile
FROM openjdk:17
LABEL author=yanghq
COPY app.jar /app.jar
EXPOSE 8080
ENTRYPOINT ["java","-jar","/app.jar"]

# Build the image
docker build -f Dockerfile -t myjavaapp:1.0 .

Note: the trailing '.' in this command matters. It sets the build context to the current directory. Because app.jar is in the current directory, /root, the instruction 'COPY app.jar /app.jar' can find the file and succeed.

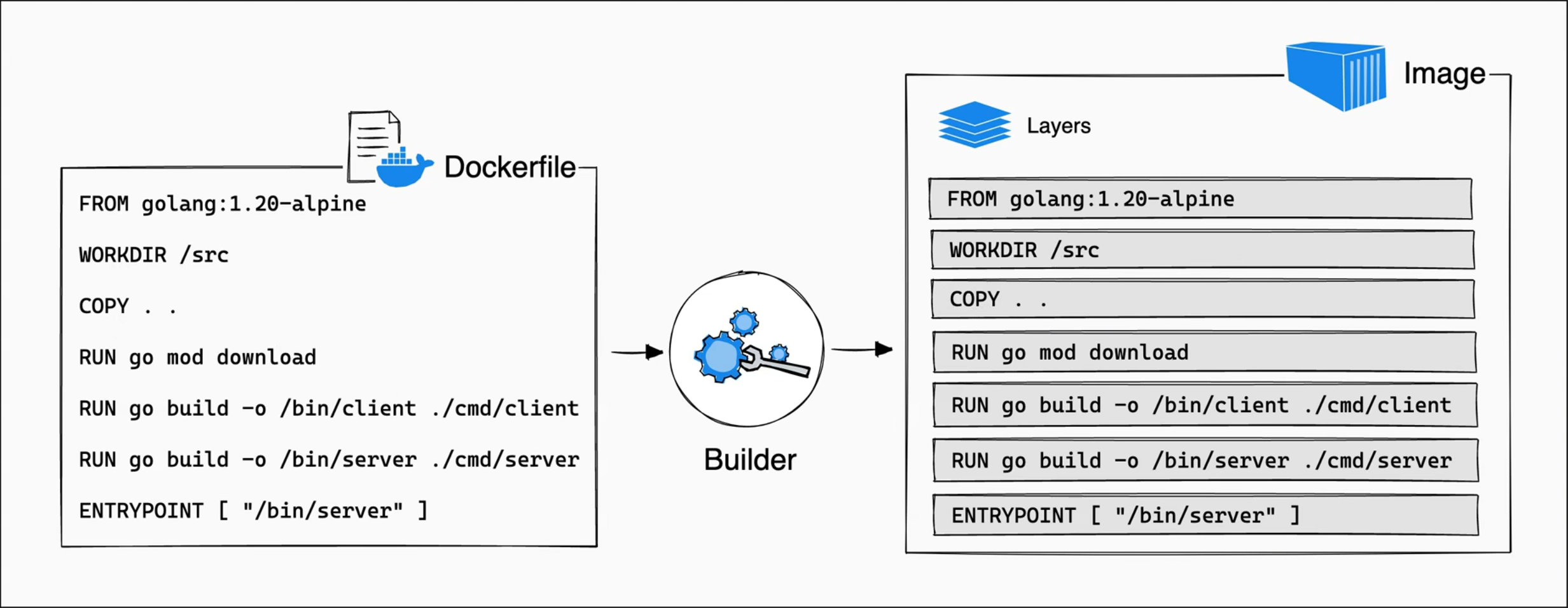

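Dockerfile image layered storage

Layered storage: at the lowest level Docker stores an image as a stack of layers, and those layers come from the Dockerfile. When the builder processes a Dockerfile, each instruction may download content or modify the filesystem. Every instruction that changes the filesystem produces its own layer, which holds the changes that instruction caused; instructions such as LABEL or EXPOSE only add metadata. Because identical layers are stored once and shared, this reduces pressure on disk storage.

Docker image layered storage in detail

How layered builds work

A Docker image is a stack of read-only layers, each produced by a Dockerfile instruction such as RUN, COPY, or ADD. Example:

# Base layer (base image)
FROM ubuntu:20.04
# New layer (apt metadata changes)
RUN apt-get update
# New layer (filesystem changes)
COPY app.py /opt

Each of these instructions produces a separate layer that records the filesystem difference (diff) from the layer below.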
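To estimate how many layers a Dockerfile adds on top of its base image, you can count its RUN, COPY, and ADD instructions. The sketch below does that with grep. count_layer_instructions is a made-up helper name, and the count is only approximate because it ignores multi-stage builds and instructions hidden by build arguments.

```shell
# Hypothetical helper: count the instructions that create filesystem layers.
count_layer_instructions() {
  # -i: instruction names are case-insensitive; -c: print the match count.
  grep -Eic '^[[:space:]]*(RUN|COPY|ADD)[[:space:]]' "$1" || true
}

# Try it on the three-instruction example from the text.
sample=$(mktemp)
cat > "$sample" <<'EOF'
FROM ubuntu:20.04
RUN apt-get update
COPY app.py /opt
EOF
count_layer_instructions "$sample"   # prints 2
rm -f "$sample"
```

To see the real layers of a built image, run docker history on it.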

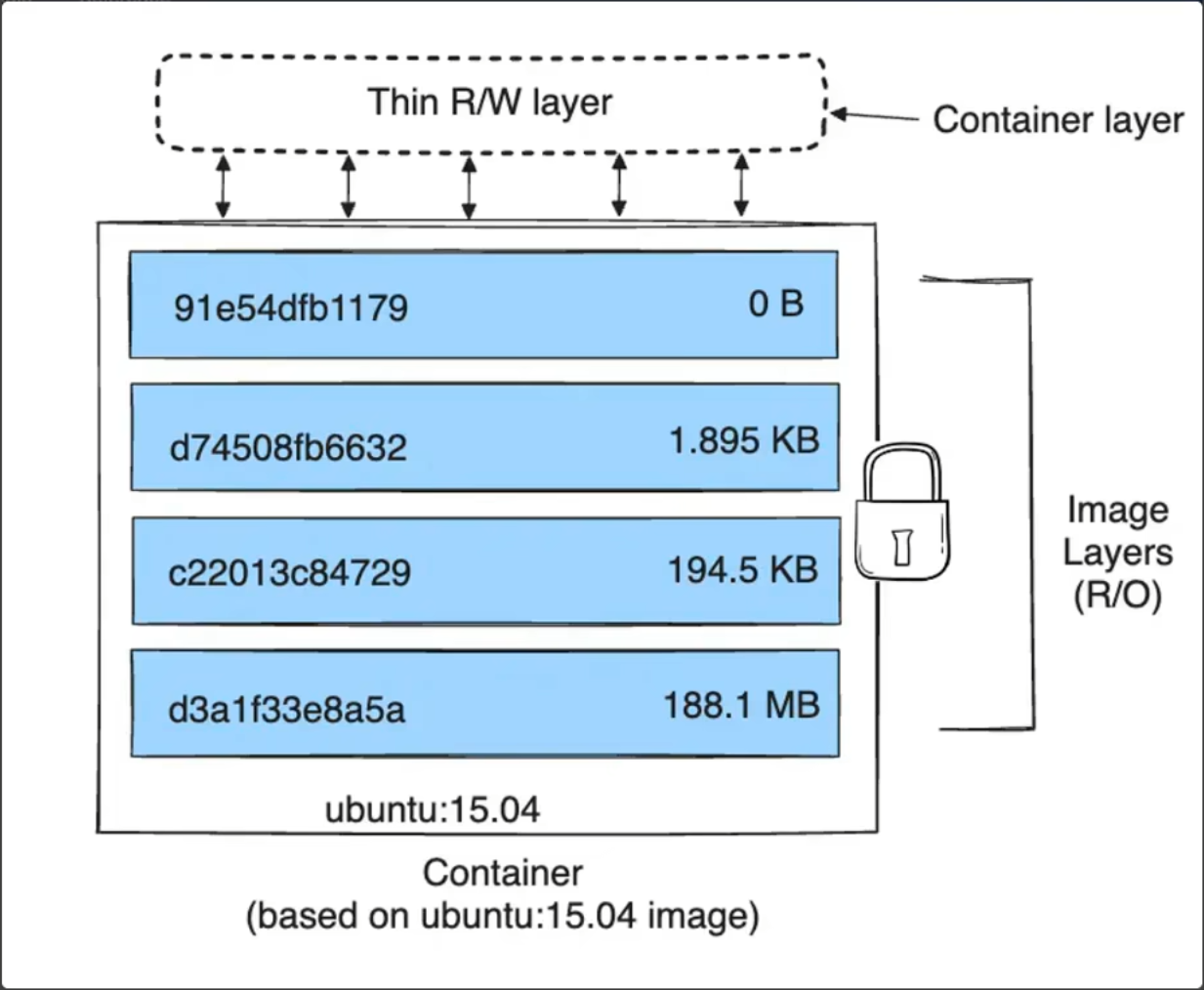

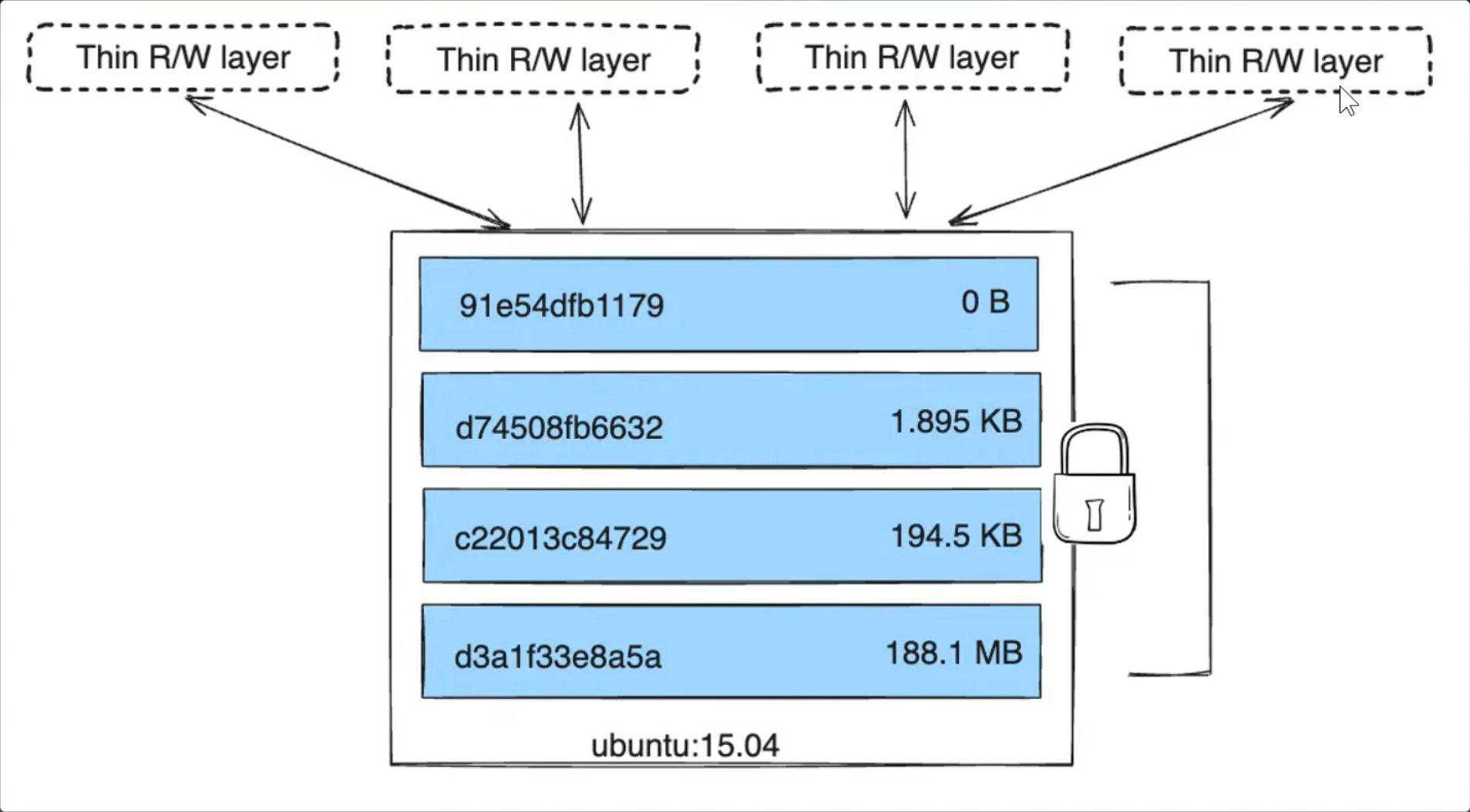

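Container and image storage

Advantages of layered storage

Efficient reuse: identical layers are shared between images (for example, when several images are based on ubuntu:15.04, the base layer is stored only once).
Build cache: if a Dockerfile instruction has not changed, the build reuses the cached layer, which speeds up the build.
Minimal transfer: pushing or pulling an image transfers only the layers that are missing on the other side, which reduces network overhead.
Storage optimization: layers rely on copy-on-write; a running container modifies data only in its own writable layer (the container layer), so nothing is stored twice.

Layer lifecycle

Build time: each layer records only the differences from the previous layer, and a union filesystem such as overlay2 merges them into a single filesystem view.
Run time: the container adds one writable layer on top of the image layers; all changes happen in that layer and the original image layers stay unchanged.

Best practices

Fewer layers: combine multiple RUN instructions (for example RUN apt-get update && apt-get install -y package).
Use .dockerignore: keep unrelated files from being pulled in by COPY/ADD and producing redundant layers.
Multi-stage builds: use multi-stage builds to leave intermediate layers out of the final image and reduce its size.

Key takeaway

Through sharing, caching, and on-demand loading, Docker's layered design significantly improves storage efficiency, build speed, and runtime performance, and is what keeps containers lightweight.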

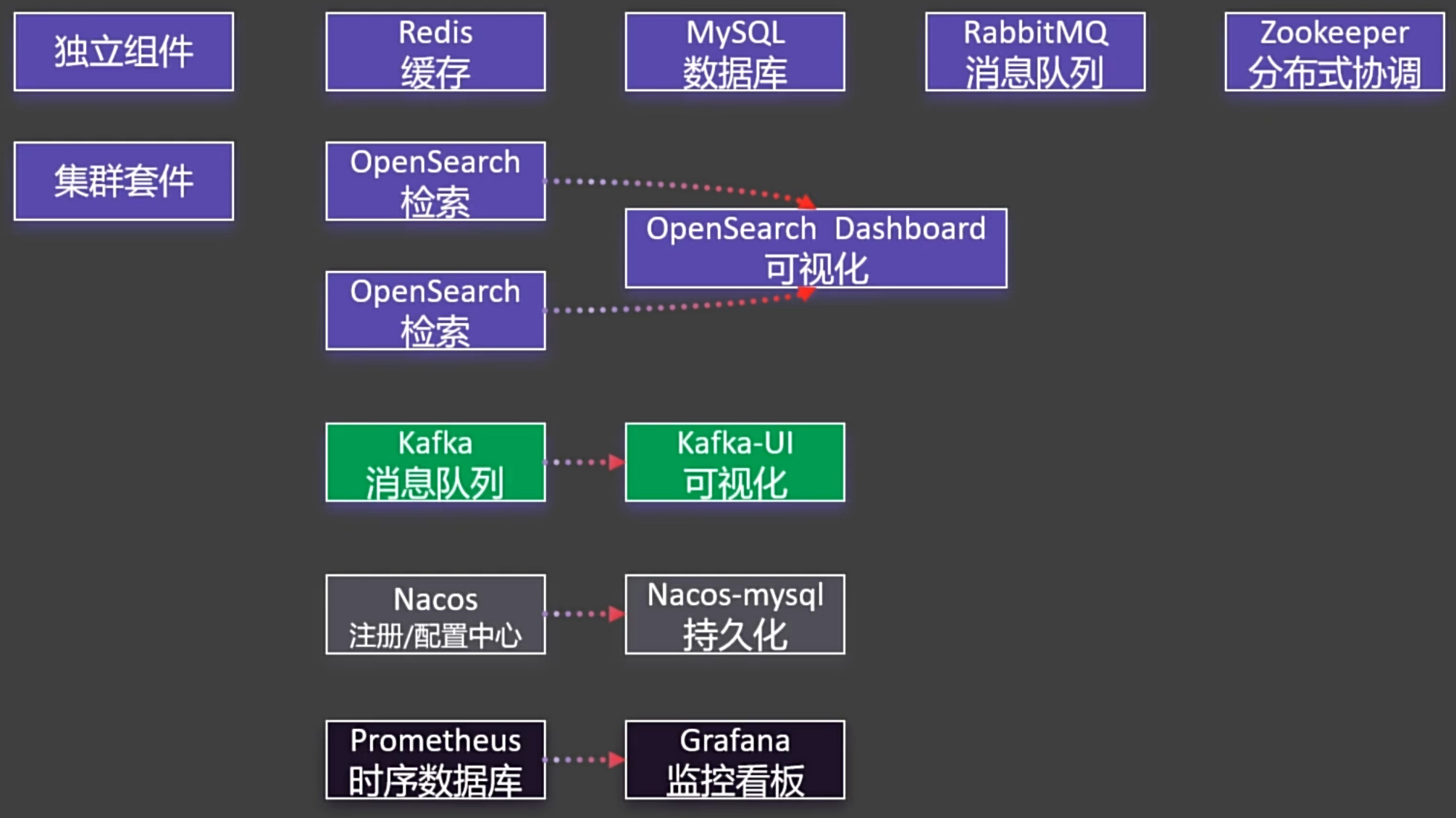

7. Appendix - Installing many middleware services in one step

# Disable memory paging and swapping on the host
sudo swapoff -a

# Edit the sysctl config file
sudo vi /etc/sysctl.conf

# Add the line below to define the desired value,
# or change the value if the key already exists,
# and then save your changes.
vm.max_map_count=262144

# Reload the kernel parameters using sysctl (applies the config file)
sudo sysctl -p
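You can also script the manual edit of /etc/sysctl.conf so that repeated runs do not add duplicate lines. The sketch below follows the same rule as the comments above: change the value if the key exists, otherwise append it. set_sysctl_key is a hypothetical helper name, and it assumes it runs in a root shell when pointed at the real file.

```shell
# Hypothetical helper: set KEY=VALUE in FILE, replacing an existing entry
# for KEY or appending a new line when there is none.
set_sysctl_key() {
  file=$1
  key=$2
  value=$3
  # Escape dots so that vm.max_map_count is matched literally.
  key_re=$(printf '%s' "$key" | sed 's/[.]/\\./g')
  if grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=" "$file"; then
    sed -E "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key}=${value}|" "$file" > "$file.tmp" &&
      mv "$file.tmp" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Usage in a root shell (commented out so the sketch has no side effects):
# set_sysctl_key /etc/sysctl.conf vm.max_map_count 262144 && sysctl -p
```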

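# Verify that the change was applied by checking the value
cat /proc/sys/vm/max_map_count

7.1. yaml

Notes:

In the file below, change 10.0.0.61 in the kafka service to your own server's IP.
Every container mounts /etc/localtime from the host, so the time shown inside the containers matches the Linux host.

Prepare a compose.yaml file with the following content: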

name: devsoft
services:
  redis:
    image: bitnami/redis:latest
    restart: always
    container_name: redis
    environment:
      - REDIS_PASSWORD=123456
    ports:
      - '6379:6379'
    volumes:
      - redis-data:/bitnami/redis/data
      - redis-conf:/opt/bitnami/redis/mounted-etc
      - /etc/localtime:/etc/localtime:ro

  mysql:
    image: mysql:8.0.31
    restart: always
    container_name: mysql
    environment:
      - MYSQL_ROOT_PASSWORD=123456
    ports:
      - '3306:3306'
      - '33060:33060'
    volumes:
      - mysql-conf:/etc/mysql/conf.d
      - mysql-data:/var/lib/mysql
      - /etc/localtime:/etc/localtime:ro

  rabbit:
    image: rabbitmq:3-management
    restart: always
    container_name: rabbitmq
    ports:
      - "5672:5672"
      - "15672:15672"
    environment:
      - RABBITMQ_DEFAULT_USER=rabbit
      - RABBITMQ_DEFAULT_PASS=rabbit
      - RABBITMQ_DEFAULT_VHOST=dev
    volumes:
      - rabbit-data:/var/lib/rabbitmq
      - rabbit-app:/etc/rabbitmq
      - /etc/localtime:/etc/localtime:ro

  opensearch-node1:
    image: opensearchproject/opensearch:2.13.0
    container_name: opensearch-node1
    environment:
      - cluster.name=opensearch-cluster # Name the cluster
      - node.name=opensearch-node1 # Name the node that will run in this container
      - discovery.seed_hosts=opensearch-node1,opensearch-node2 # Nodes to look for when discovering the cluster
      - cluster.initial_cluster_manager_nodes=opensearch-node1,opensearch-node2 # Nodes eligible to serve as cluster manager
      - bootstrap.memory_lock=true # Disable JVM heap memory swapping
      - "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m" # Set min and max JVM heap sizes to at least 50% of system RAM
      - "DISABLE_INSTALL_DEMO_CONFIG=true" # Prevents execution of bundled demo script which installs demo certificates and security configurations to OpenSearch
      - "DISABLE_SECURITY_PLUGIN=true" # Disables Security plugin
    ulimits:
      memlock:
        soft: -1 # Set memlock to unlimited (no soft or hard limit)
        hard: -1
      nofile:
        soft: 65536 # Maximum number of open files for the opensearch user - set to at least 65536
        hard: 65536
    volumes:
      - opensearch-data1:/usr/share/opensearch/data # Creates volume called opensearch-data1 and mounts it to the container
      - /etc/localtime:/etc/localtime:ro
    ports:
      - 9200:9200 # REST API
      - 9600:9600 # Performance Analyzer

  opensearch-node2:
    image: opensearchproject/opensearch:2.13.0
    container_name: opensearch-node2
    environment:
      - cluster.name=opensearch-cluster # Name the cluster
      - node.name=opensearch-node2 # Name the node that will run in this container
      - discovery.seed_hosts=opensearch-node1,opensearch-node2 # Nodes to look for when discovering the cluster
      - cluster.initial_cluster_manager_nodes=opensearch-node1,opensearch-node2 # Nodes eligible to serve as cluster manager
      - bootstrap.memory_lock=true # Disable JVM heap memory swapping
      - "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m" # Set min and max JVM heap sizes to at least 50% of system RAM
      - "DISABLE_INSTALL_DEMO_CONFIG=true" # Prevents execution of bundled demo script which installs demo certificates and security configurations to OpenSearch
      - "DISABLE_SECURITY_PLUGIN=true" # Disables Security plugin
    ulimits:
      memlock:
        soft: -1 # Set memlock to unlimited (no soft or hard limit)
        hard: -1
      nofile:
        soft: 65536 # Maximum number of open files for the opensearch user - set to at least 65536
        hard: 65536
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - opensearch-data2:/usr/share/opensearch/data # Creates volume called opensearch-data2 and mounts it to the container

  opensearch-dashboards:
    image: opensearchproject/opensearch-dashboards:2.13.0
    container_name: opensearch-dashboards
    ports:
      - 5601:5601 # Map host port 5601 to container port 5601
    expose:
      - "5601" # Expose port 5601 for web access to OpenSearch Dashboards
    environment:
      - 'OPENSEARCH_HOSTS=["http://opensearch-node1:9200","http://opensearch-node2:9200"]'
      - "DISABLE_SECURITY_DASHBOARDS_PLUGIN=true" # Disables security dashboards plugin in OpenSearch Dashboards
    volumes:
      - /etc/localtime:/etc/localtime:ro

  zookeeper:
    image: bitnami/zookeeper:3.9
    container_name: zookeeper
    restart: always
    ports:
      - "2181:2181"
    volumes:
      - "zookeeper_data:/bitnami"
      - /etc/localtime:/etc/localtime:ro
    environment:
      - ALLOW_ANONYMOUS_LOGIN=yes

  kafka:
    image: 'bitnami/kafka:3.4'
    container_name: kafka
    restart: always
    hostname: kafka
    ports:
      - '9092:9092'
      - '9094:9094'
    environment:
      - KAFKA_CFG_NODE_ID=0
      - KAFKA_CFG_PROCESS_ROLES=controller,broker
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093,EXTERNAL://0.0.0.0:9094
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092,EXTERNAL://10.0.0.61:9094
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,EXTERNAL:PLAINTEXT,PLAINTEXT:PLAINTEXT
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=0@kafka:9093
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - ALLOW_PLAINTEXT_LISTENER=yes
      - "KAFKA_HEAP_OPTS=-Xmx512m -Xms512m"
    volumes:
      - kafka-conf:/bitnami/kafka/config
      - kafka-data:/bitnami/kafka/data
      - /etc/localtime:/etc/localtime:ro

  kafka-ui:
    container_name: kafka-ui
    image: provectuslabs/kafka-ui:latest
    restart: always
    ports:
      - 8080:8080
    environment:
      DYNAMIC_CONFIG_ENABLED: 'true'
      KAFKA_CLUSTERS_0_NAME: kafka-dev
      KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: kafka:9092
    volumes:
      - kafkaui-app:/etc/kafkaui
      - /etc/localtime:/etc/localtime:ro

  nacos:
    image: nacos/nacos-server:v2.3.1
    container_name: nacos
    ports:
      - 8848:8848
      - 9848:9848
    environment:
      - PREFER_HOST_MODE=hostname
      - MODE=standalone
      - JVM_XMX=512m
      - JVM_XMS=512m
      - SPRING_DATASOURCE_PLATFORM=mysql
      - MYSQL_SERVICE_HOST=nacos-mysql
      - MYSQL_SERVICE_DB_NAME=nacos_devtest
      - MYSQL_SERVICE_PORT=3306
      - MYSQL_SERVICE_USER=nacos
      - MYSQL_SERVICE_PASSWORD=nacos
      - MYSQL_SERVICE_DB_PARAM=characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=Asia/Shanghai&allowPublicKeyRetrieval=true
      - NACOS_AUTH_IDENTITY_KEY=2222
      - NACOS_AUTH_IDENTITY_VALUE=2xxx
      - NACOS_AUTH_TOKEN=SecretKey012345678901234567890123456789012345678901234567890123456789
      - NACOS_AUTH_ENABLE=true
    volumes:
      - /app/nacos/standalone-logs/:/home/nacos/logs
      - /etc/localtime:/etc/localtime:ro
    depends_on:
      nacos-mysql:
        condition: service_healthy

  nacos-mysql:
    container_name: nacos-mysql
    build:
      context: .
      dockerfile_inline: |
        FROM mysql:8.0.31
        # ADD https://raw.githubusercontent.com/alibaba/nacos/2.3.2/distribution/conf/mysql-schema.sql /docker-entrypoint-initdb.d/nacos-mysql.sql
        # Problem: ADD downloads the SQL file directly from GitHub, so the build fails (for example with a timeout) when GitHub is unreachable.
        # Changed as follows: download mysql-schema.sql yourself, upload it with rz, then COPY it into /docker-entrypoint-initdb.d/ in the image under the name nacos-mysql.sql.
        COPY ./mysql-schema.sql /docker-entrypoint-initdb.d/nacos-mysql.sql
        RUN chown -R mysql:mysql /docker-entrypoint-initdb.d/nacos-mysql.sql
        EXPOSE 3306
        CMD ["mysqld", "--character-set-server=utf8mb4", "--collation-server=utf8mb4_unicode_ci"]
    image: nacos/mysql:8.0.31
    environment:
      - MYSQL_ROOT_PASSWORD=root
      - MYSQL_DATABASE=nacos_devtest
      - MYSQL_USER=nacos
      - MYSQL_PASSWORD=nacos
      - LANG=C.UTF-8
    volumes:
      - nacos-mysqldata:/var/lib/mysql
      - /etc/localtime:/etc/localtime:ro
    ports:
      - "13306:3306"
    healthcheck:
      test: [ "CMD", "mysqladmin", "ping", "-h", "localhost" ]
      interval: 5s
      timeout: 10s
      retries: 10

  prometheus:
    image: prom/prometheus:v2.52.0
    container_name: prometheus
    restart: always
    ports:
      - 9090:9090
    volumes:
      - prometheus-data:/prometheus
      - prometheus-conf:/etc/prometheus
      - /etc/localtime:/etc/localtime:ro

  grafana:
    image: grafana/grafana:10.4.2
    container_name: grafana
    restart: always
    ports:
      - 3000:3000
    volumes:
      - grafana-data:/var/lib/grafana
      - /etc/localtime:/etc/localtime:ro

volumes:
  redis-data:
  redis-conf:
  mysql-conf:
  mysql-data:
  rabbit-data:
  rabbit-app:
  opensearch-data1:
  opensearch-data2:
  nacos-mysqldata:
  zookeeper_data:
  kafka-conf:
  kafka-data:
  kafkaui-app:
  prometheus-data:
  prometheus-conf:
  grafana-data:

7.2. Start
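Before bringing the stack up, the 10.0.0.61 placeholder in the kafka service has to be changed to your server's address. One way to script that is sketched below. set_kafka_external_ip is a made-up helper name, and 192.168.1.20 is only an example address.

```shell
# Hypothetical helper: replace the placeholder IP 10.0.0.61 in FILE with NEW_IP.
set_kafka_external_ip() {
  file=$1
  new_ip=$2
  # Dots are escaped so that only the literal address is matched.
  sed "s/10\.0\.0\.61/${new_ip}/g" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Usage (commented out; run it in the directory that holds compose.yaml):
# set_kafka_external_ip compose.yaml 192.168.1.20
```

Only the EXTERNAL advertised listener uses this address. Clients outside Docker connect on port 9094, while containers in the same project keep using kafka:9092.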

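# Run this in the directory that contains compose.yaml
docker compose up -d

# Wait for all containers to start

Tip: after a server reboot, some containers may fail to start. Running docker compose up -d again is enough; all the services should then start, and no data is lost.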
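The "run it again" advice can be wrapped in a small retry loop. The sketch below is a generic helper and not a Docker feature. The name retry and the attempt and delay values in the usage line are arbitrary choices for illustration.

```shell
# Hypothetical helper: run a command up to ATTEMPTS times, sleeping DELAY
# seconds between tries; returns non-zero if every attempt fails.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Usage (commented out; needs Docker and compose.yaml in the current directory):
# retry 3 10 docker compose up -d
```

Because up -d only touches containers that are not already running as configured, repeating it is safe for the services that started correctly the first time.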